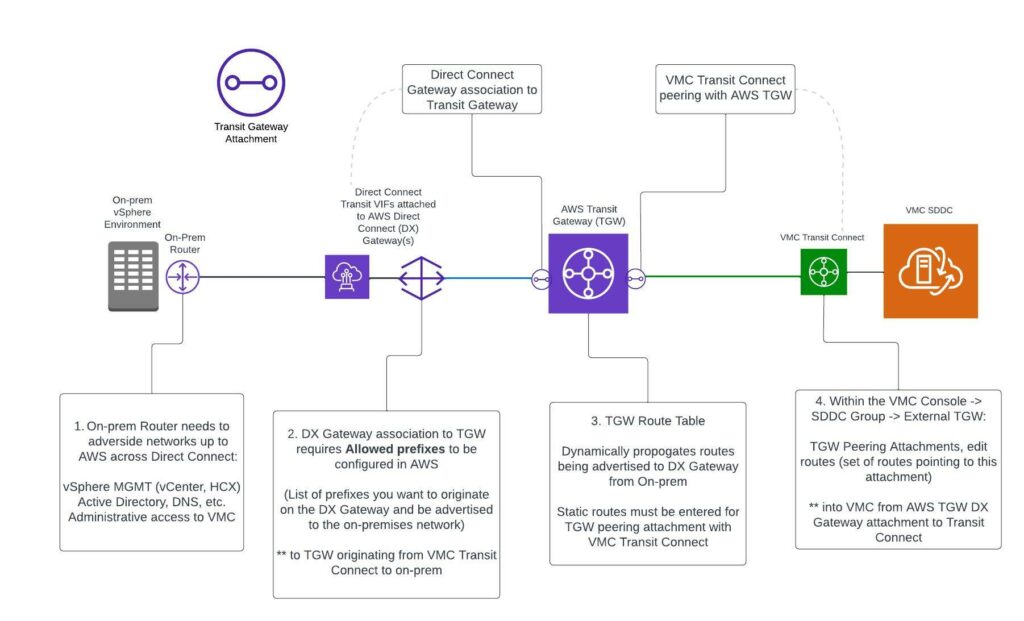

Anyone who is currently running or has deployed VMware Cloud on AWS (VMC) will tell you that hybrid cloud networking adds the most complexity. That makes sense: the VMware aspect of VMC is frankly pretty easy. Getting access to a VMware SDDC in any public cloud provider typically takes about 10 to 15 minutes of actual work and maybe 2 hours of watching a status bar until you have a usable VMware cluster running in the cloud.

Getting different data centers to be able to talk to each other? That is where the “fun” begins, and also where many customers finally realize that VMC is really just another data center. VMC connects to one AWS VPC by default, but if you have plans to do any kind of multi-account AWS native stuff, or things like third-party traffic inspection or SD-WAN, AWS Transit Gateway (TGW) is going to be your best friend.

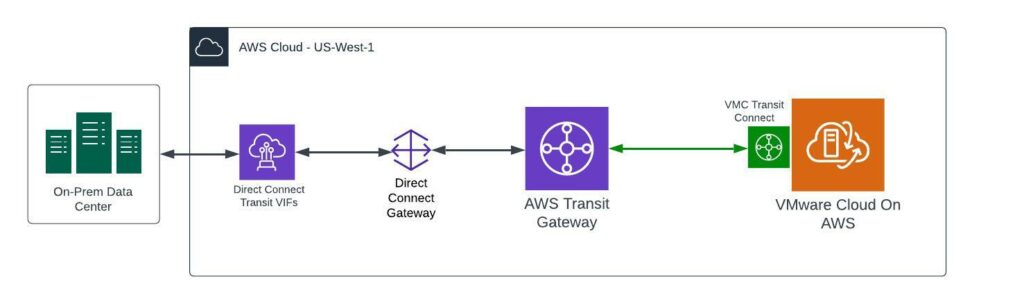

For any organization with a mature AWS hub and spoke network design using TGW this may be a piece of cake, but a good number of customers use VMC as a stepping stone into AWS native. The first considerations for VMC usually involve creating hybrid connectivity between VMC and some kind of on-prem environment. Having dealt with many customers who must dive into AWS head first for this, I decided to map out the specifics of how to use Transit Connect and TGW to connect VMC to an on-prem environment. Hopefully this will make things a little easier for anyone who needs the end-to-end view of this network architecture and how to configure it along the way.

What is Transit Connect?

In the early days of VMC, creating a hub and spoke network with an AWS TGW required creating VPN connections between VMC and TGW … because BGP. That’s my non Network Engineer way of explaining it. More recently, VMware released Transit Connect, which is essentially an AWS TGW that VMware manages for customers as part of the VMC service. It works just like an AWS TGW; it is simply managed from within the VMC Cloud Console as part of an SDDC Group. Even more recently, VMware made it extremely simple to peer a VMC Transit Connect with a customer managed AWS TGW.

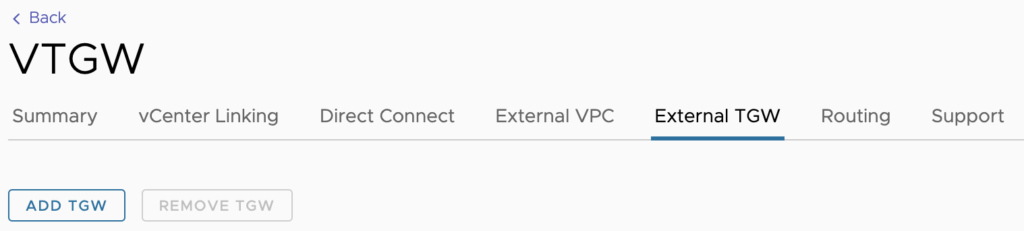

From within the VMC Console, you select External TGW and enter an AWS account number, TGW ID, AWS region, and BGP route. This creates a TGW peering request, which can then be accepted within AWS for the specified TGW. This makes for a quick and easy way to peer VMC directly with an AWS TGW. All this is just one piece of the hybrid connectivity puzzle that includes AWS Direct Connect (DX) and all the BGP routing that needs to take place along the way to allow VMC to talk to an on-prem data center.
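
The acceptance step on the AWS side can also be scripted. Below is a minimal sketch, assuming the boto3 EC2 client calls `describe_transit_gateway_peering_attachments` and `accept_transit_gateway_peering_attachment`. The account number and attachment IDs are made up for illustration, and `FakeEc2Client` is a hypothetical stand-in so the sketch runs without AWS credentials.

```python
def accept_pending_peerings(ec2_client, expected_requester_account):
    """Accept pending TGW peering requests that come from the expected account.

    Pagination is ignored here to keep the sketch short.
    """
    response = ec2_client.describe_transit_gateway_peering_attachments()
    accepted = []
    for attachment in response["TransitGatewayPeeringAttachments"]:
        pending = attachment["State"] == "pendingAcceptance"
        requester = attachment["RequesterTgwInfo"]["OwnerId"]
        if pending and requester == expected_requester_account:
            attachment_id = attachment["TransitGatewayAttachmentId"]
            ec2_client.accept_transit_gateway_peering_attachment(
                TransitGatewayAttachmentId=attachment_id
            )
            accepted.append(attachment_id)
    return accepted


class FakeEc2Client:
    """Hypothetical stand-in for boto3.client("ec2"); records what was accepted."""

    def __init__(self, attachments):
        self._attachments = attachments
        self.accepted_ids = []

    def describe_transit_gateway_peering_attachments(self):
        return {"TransitGatewayPeeringAttachments": self._attachments}

    def accept_transit_gateway_peering_attachment(self, TransitGatewayAttachmentId):
        self.accepted_ids.append(TransitGatewayAttachmentId)
        return {}


# Made-up attachments: one pending request and one that is already active.
fake_client = FakeEc2Client([
    {
        "TransitGatewayAttachmentId": "tgw-attach-0aaa",
        "State": "pendingAcceptance",
        "RequesterTgwInfo": {"OwnerId": "111111111111"},
    },
    {
        "TransitGatewayAttachmentId": "tgw-attach-0bbb",
        "State": "available",
        "RequesterTgwInfo": {"OwnerId": "111111111111"},
    },
])
accepted_ids = accept_pending_peerings(fake_client, "111111111111")
print(accepted_ids)
```

Against a real account, the same function should work with `boto3.client("ec2", region_name=...)` passed in place of the fake client, using the region that hosts the customer managed TGW. Checking the requester account before accepting is a design choice in this sketch, not something the console flow requires.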

First Hop: On-Prem

This is where you have the most control over what will be allowed across the hybrid link to VMC from an on-prem environment. Some type of gateway device typically resides on-prem that acts as the connection point for AWS DX into a private data center. This device is a black box to me, but network admins typically have a good grasp on BGP routing within their environment and how to configure this device to advertise the proper on-prem networks across the DX link. This is the first configuration point in the route from on-prem to VMC.

Second Hop: AWS DX Gateway

With this type of AWS network architecture utilizing DX, customers will need a DX Transit Virtual Interface (VIF) attached to an AWS DX Gateway. A DX Gateway is a globally available and distributed network resource in AWS that can be associated with VPCs or TGWs. It is kind of like a global DX junction point that more easily allows for dynamic routing in many different types of scenarios. In this case, we are connecting to a TGW, which requires the use of a DX Transit VIF. This does come with some limitations on the AWS side: a single DX Gateway can only have Private VIFs OR Transit VIFs attached, not both types at the same time. Also, a DX connection can provide only ONE Transit VIF, and a minimum of 1 Gbps is required to do so.

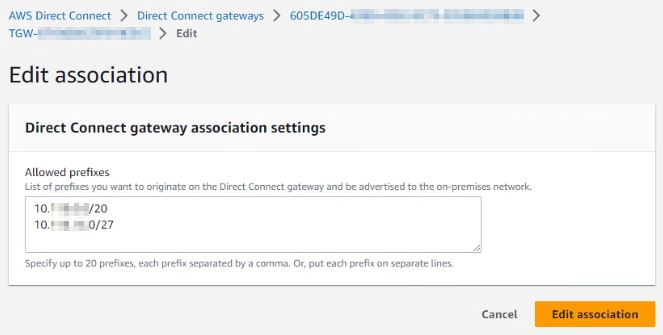

Once a Transit VIF is attached to a DX Gateway, that DX Gateway can be configured in AWS to allow networks originating from the public cloud to be advertised back to the on-prem data center. In this case with VMC, that would typically be the management network, any compute networks you intend to allow for hybrid connectivity (think AD/DNS) and possibly HCX.
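
To make the list of advertised networks concrete, here is a small sketch that collapses a set of VMC CIDRs into the shortest equivalent prefix list using Python's standard `ipaddress` module. The CIDRs are made up for illustration. The `{"cidr": ...}` shape is an assumption meant to mirror the allowed-prefix entries that boto3's Direct Connect client takes when associating a gateway; the sketch itself makes no AWS calls.

```python
import ipaddress


def build_allowed_prefixes(vmc_cidrs):
    """Collapse VMC CIDRs into the smallest equivalent list of prefixes."""
    networks = [ipaddress.ip_network(cidr) for cidr in vmc_cidrs]
    # collapse_addresses merges adjacent or nested networks and returns them sorted.
    collapsed = ipaddress.collapse_addresses(networks)
    return [{"cidr": str(network)} for network in collapsed]


# Hypothetical VMC networks to advertise back to on-prem.
vmc_networks = [
    "10.2.0.0/16",   # management
    "10.10.0.0/24",  # compute (AD/DNS)
    "10.10.1.0/24",  # compute, adjacent to the one above
    "10.20.5.0/24",  # HCX
]
allowed_prefixes = build_allowed_prefixes(vmc_networks)
print(allowed_prefixes)
```

The two adjacent compute /24s merge into a single /23, which keeps the prefix list short without advertising anything beyond the original networks.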

Third Hop: AWS TGW

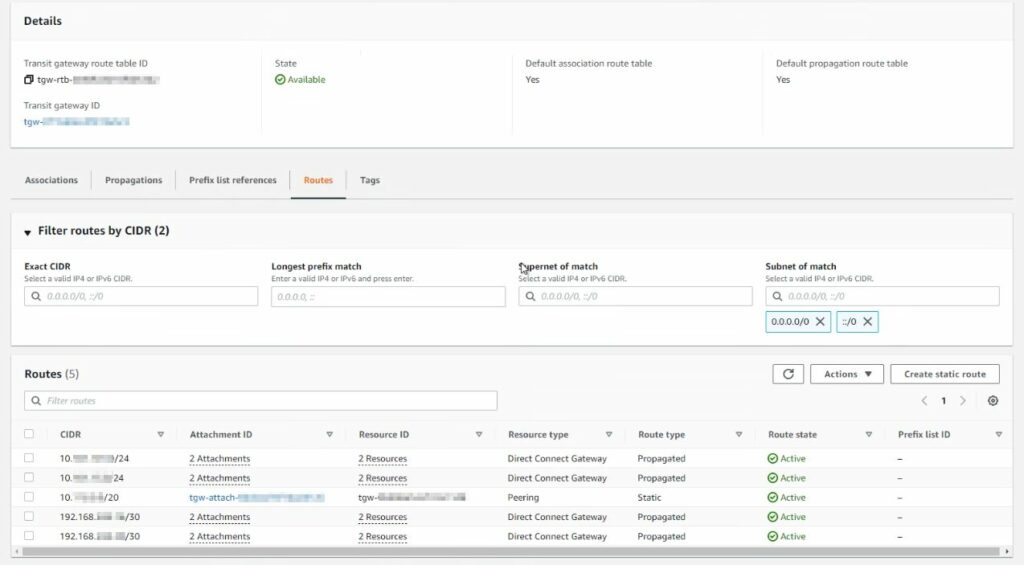

An AWS DX Gateway can be associated with an AWS TGW. In this architecture, the AWS TGW is acting as the network “hub” within AWS. It is a way to attach different resources, whether they are VPCs, VPNs, other TGWs or DX Gateways, and control the routing between all of the different resources that are attached to it. In this architecture, we are associating the DX Gateway (and corresponding Transit VIF for on-prem connectivity) with the same AWS TGW that was earlier peered with VMC Transit Connect.

Once the TGW is both peered with Transit Connect and associated to the DX Gateway, the “plumbing” of the hybrid cloud connection is all in place. Proper BGP routing across the entire chain is also required to allow network communication. In the case of TGW, that means statically configuring the TGW route table for any VMC networks to route via the Transit Connect attachment ID, which appears within AWS as a TGW Attachment ID. The on-prem networks should automatically appear in this route table as propagated routes due to the automation provided by AWS from the DX Gateway association with TGW.
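
The static route step can be sketched as follows, assuming the boto3 EC2 client call `create_transit_gateway_route`. The route table ID, attachment ID, and CIDRs are made up, and `RecordingClient` is a hypothetical stand-in that records calls so the sketch runs without AWS access.

```python
def add_vmc_static_routes(ec2_client, route_table_id, attachment_id, vmc_cidrs):
    """Create one static TGW route per VMC network via the peering attachment."""
    created = []
    for cidr in vmc_cidrs:
        ec2_client.create_transit_gateway_route(
            DestinationCidrBlock=cidr,
            TransitGatewayRouteTableId=route_table_id,
            TransitGatewayAttachmentId=attachment_id,
        )
        created.append(cidr)
    return created


class RecordingClient:
    """Hypothetical stand-in for boto3.client("ec2"); records each route request."""

    def __init__(self):
        self.calls = []

    def create_transit_gateway_route(self, **kwargs):
        self.calls.append(kwargs)
        return {"Route": {"DestinationCidrBlock": kwargs["DestinationCidrBlock"]}}


# Made-up identifiers for illustration only.
recording_client = RecordingClient()
created_routes = add_vmc_static_routes(
    recording_client,
    route_table_id="tgw-rtb-0123456789abcdef0",
    attachment_id="tgw-attach-0aaa",
    vmc_cidrs=["10.2.0.0/16", "10.10.0.0/23"],
)
print(created_routes)
```

Only the VMC networks need this treatment. The on-prem networks arrive as propagated routes from the DX Gateway association, so they are left out of the static list.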

Fourth (and Final) Hop: VMC Transit Connect

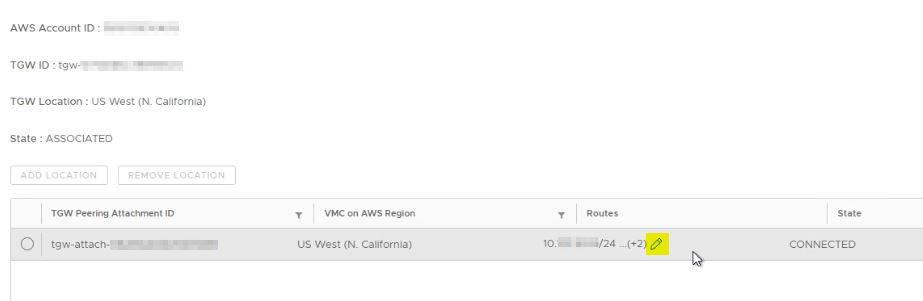

At this point in the chain, Transit Connect is already peered with the AWS TGW. The only thing to be aware of from a configuration standpoint is the routing. VMC will by default advertise ALL of the networks that originate from it (Management, Compute, HCX, etc.). The Transit Connect -> External TGW menu within the VMC Cloud Console is where you can edit the routes for this peering with TGW.

Clicking the pencil / edit icon will pop up a window to edit the routes pointing at the TGW, which are the networks allowed in from on-prem to VMC. In some cases this could simply be all RFC 1918 networks if the intent is to allow all private connectivity from a routing standpoint. There are still gateway firewalls and other ways to further lock that traffic down from a security perspective. The routes may also be more specific, to ensure that no network overlaps occur.
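
Before entering routes, it can help to check the candidate on-prem prefixes against the VMC networks. The sketch below uses only Python's standard `ipaddress` module. All CIDRs are made up for illustration, and the helper names are my own.

```python
import ipaddress

# The three private address blocks defined by RFC 1918.
RFC1918_BLOCKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def find_overlaps(on_prem_cidrs, vmc_cidrs):
    """Return every (on-prem, VMC) pair of CIDRs that share address space."""
    overlaps = []
    for on_prem in on_prem_cidrs:
        for vmc in vmc_cidrs:
            if ipaddress.ip_network(on_prem).overlaps(ipaddress.ip_network(vmc)):
                overlaps.append((on_prem, vmc))
    return overlaps


def is_rfc1918(cidr):
    """True if the whole prefix sits inside one of the RFC 1918 blocks."""
    network = ipaddress.ip_network(cidr)
    return any(network.subnet_of(block) for block in RFC1918_BLOCKS)


# Hypothetical networks on each side of the hybrid link.
on_prem_networks = ["10.0.0.0/16", "172.16.0.0/16", "10.10.0.0/20"]
vmc_networks = ["10.2.0.0/16", "10.10.0.0/24"]

overlapping_pairs = find_overlaps(on_prem_networks, vmc_networks)
print(overlapping_pairs)
print(is_rfc1918("192.168.10.0/24"), is_rfc1918("172.32.0.0/16"))
```

In this made-up data the on-prem 10.10.0.0/20 contains the VMC 10.10.0.0/24, which is exactly the kind of overlap that more specific routes are meant to avoid.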

With all this built and configured for the proper BGP routing, the glory of hybrid cloud connectivity is achieved! Transit Connect and the ability to peer with AWS TGW make one part of this super simple. The key points to remember are 1) all the other services (DX Transit VIF, DX Gateway, TGW) and the network architecture required, and 2) the places within those services to configure and control routing across the connection.