Multi-account landing zones have been the de facto standard for architecting AWS environments from a governance and compliance standpoint. Control Tower is an AWS service, released in 2019, that enables customers to quickly and easily deploy the framework for a well-architected landing zone. More recently, AWS released Landing Zone Accelerator (LZA), which builds on Control Tower and additionally deploys components that help customers further align with AWS best practices. AWS LZA also comes with industry-specific customizations for healthcare, finance, education, and other sectors.

Control Tower is a great way to get started with an AWS landing zone, but a basic deployment by no means simply checks off the “we have a landing zone!” box. Operationalizing a landing zone requires additional customer-specific design and customization. AWS LZA adds another layer of customization and could be considered the ideal starting point for new landing zone deployments. For existing Control Tower-based landing zones, it is possible to “retrofit” LZA on top and take advantage of its newer functionality.

I spent some time going through the process of running LZA in an existing Control Tower lab environment to see how this actually worked out in the real world and what benefits could be realized by deploying LZA in a brown-field environment. The AWS documentation on this process was … limited. Hopefully my trial and (lots of) error from this deployment can help others who may be looking for info on how to successfully navigate this process.

Getting Started with AWS LZA

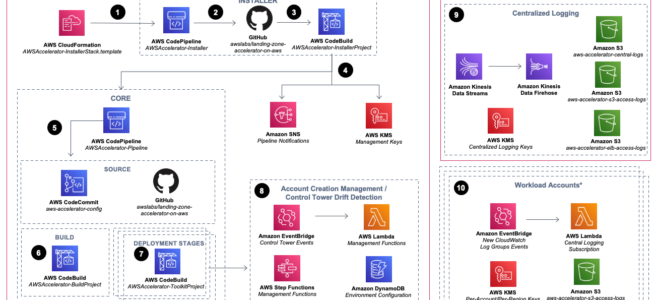

AWS provides great documentation overall on the default LZA architecture and deployment process. The first important thing to note is the list of prerequisites, which includes creating a GitHub personal access token and storing it in AWS Secrets Manager. After reviewing the prereqs, it is worth getting familiar with the architecture that LZA deploys:

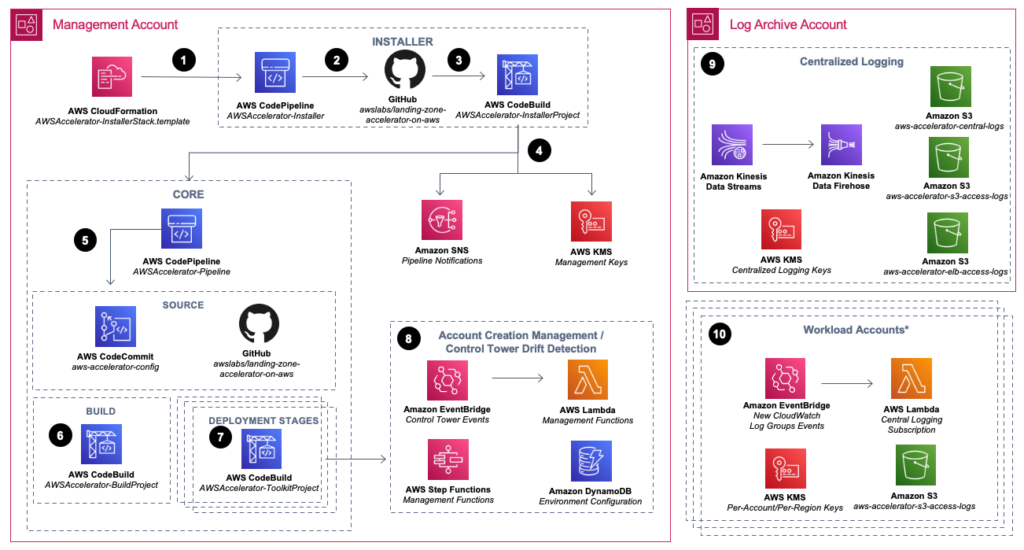

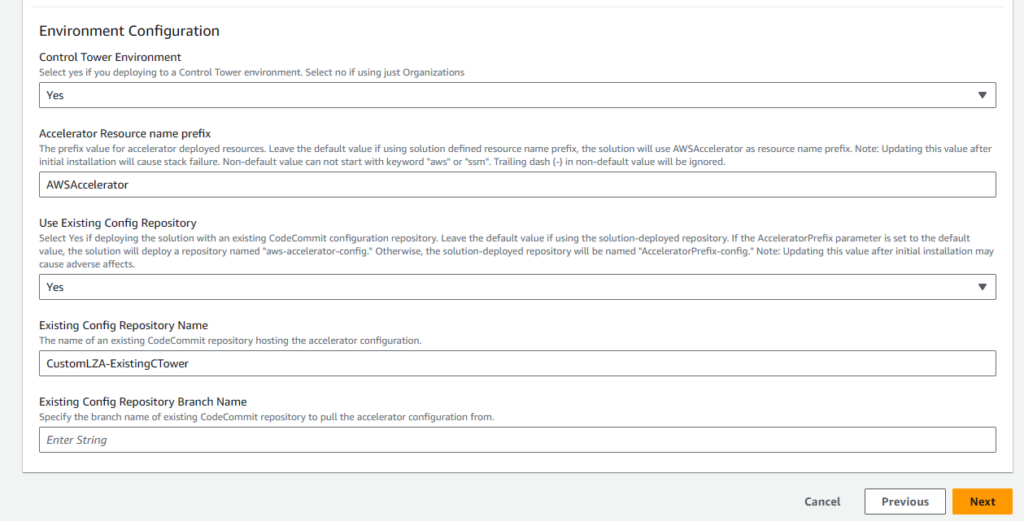

The first step in this process uses a CloudFormation template to create a stack that builds a number of resources, including a CodePipeline named AWSAccelerator-Installer (icon #2 in the architecture diagram). The CloudFormation stack parameters required some customization for my existing Control Tower env. The source GitHub repository parameter is pre-populated with the location of the AWS LZA code. For the Mandatory Account email parameters, I had to manually enter the root email addresses of the accounts that Control Tower had already created (Management, Audit, Log Archive).

I also changed the default value of the Use Existing Config Repository parameter.

I did this because early on, after cloning the AWS LZA GitHub repo locally and reading through the README, I realized that LZA had a reference folder with a number of sample configurations. LZA reads from a number of configuration files to initially deploy the organization, but given my existing organization, I knew the standard default config wouldn’t work. To get around this, I grabbed the basic sample config folder, customized its contents based on my existing env, and pushed the custom config to a CodeCommit repo that I created so it would be available during the initial run. There may be a different way to do this, such as pointing the source GitHub repo at a custom copy with non-default config files right off the bat, but this made the most sense to me at the time.
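Before pushing a customized config to CodeCommit, it can help to confirm the folder still contains every file LZA reads. Here is a minimal Python sketch; the six file names are my assumption based on the LZA sample configurations, so check them against the sample folder in the version you cloned.

```python
from pathlib import Path

# Assumption: core file names used by the LZA sample configurations.
# Verify this list against the sample config folder of the LZA version you cloned.
REQUIRED_CONFIG_FILES = (
    "accounts-config.yaml",
    "global-config.yaml",
    "iam-config.yaml",
    "network-config.yaml",
    "organization-config.yaml",
    "security-config.yaml",
)


def missing_config_files(config_dir: str) -> list[str]:
    """Return the required config file names that are absent from config_dir."""
    root = Path(config_dir)
    return [name for name in REQUIRED_CONFIG_FILES if not (root / name).is_file()]
```

For example, `missing_config_files("./my-lza-config")` returning an empty list means every expected file is present; it says nothing about whether the contents are valid.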

Launch LZA CloudFormation Stack

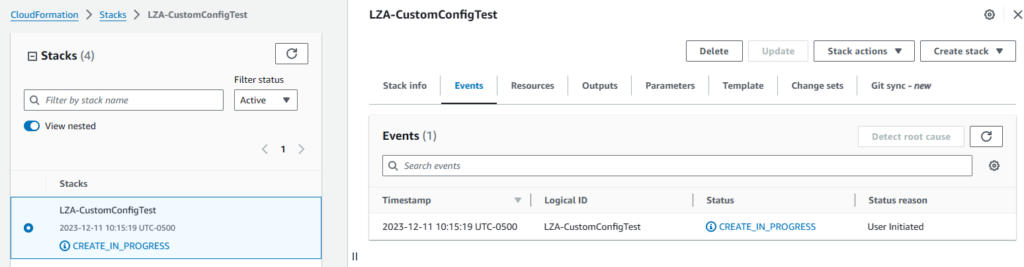

Upon the initial launch of the stack, I immediately ran into my first error because I hadn’t filled in the Existing Config Repository Branch Name parameter. The stack couldn’t fully delete itself after the failed run, so I had to manually delete a leftover resource and then delete and re-create the stack with “main” as the branch name. Quick and easy.
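A small preflight check on the parameter values could catch this before launching the stack. The Python sketch below assumes the installer template’s parameter keys are `UseExistingConfigRepo`, `ExistingConfigRepositoryName`, `ExistingConfigRepositoryBranchName`, `ManagementAccountEmail`, `LogArchiveAccountEmail` and `AuditAccountEmail`; treat those keys as assumptions and confirm them against the template you deploy. `to_cfn_parameters` produces the `ParameterKey`/`ParameterValue` list shape that the CloudFormation CreateStack API accepts.

```python
# Assumption: parameter keys of the LZA installer template; verify before use.
MANDATORY_EMAIL_KEYS = (
    "ManagementAccountEmail",
    "LogArchiveAccountEmail",
    "AuditAccountEmail",
)


def preflight_errors(params: dict[str, str]) -> list[str]:
    """Return human-readable problems with the stack parameters (empty if none)."""
    errors: list[str] = []
    # When reusing an existing config repo, both its name and branch are needed.
    if params.get("UseExistingConfigRepo", "No") == "Yes":
        for key in ("ExistingConfigRepositoryName", "ExistingConfigRepositoryBranchName"):
            if not params.get(key, "").strip():
                errors.append(f"{key} must be set when UseExistingConfigRepo is Yes")
    # Very loose sanity check that each mandatory email looks like an address.
    for key in MANDATORY_EMAIL_KEYS:
        if "@" not in params.get(key, ""):
            errors.append(f"{key} must be an email address")
    return errors


def to_cfn_parameters(params: dict[str, str]) -> list[dict[str, str]]:
    """Convert a flat dict into the Parameters list shape CloudFormation expects."""
    return [{"ParameterKey": k, "ParameterValue": v} for k, v in params.items()]
```

With my first attempt’s values, the only error reported would be the missing branch name; adding `"ExistingConfigRepositoryBranchName": "main"` clears it.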

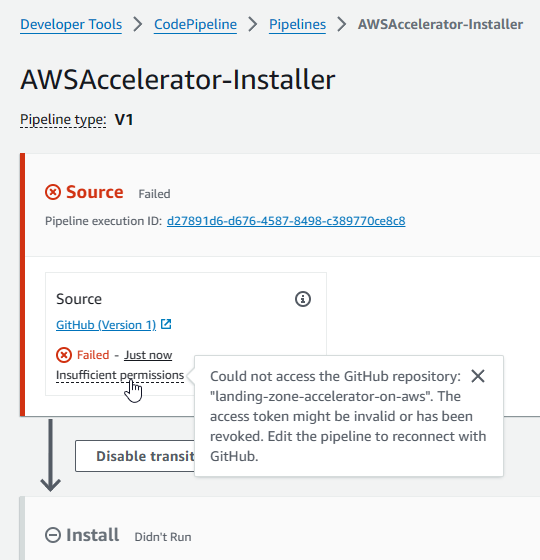

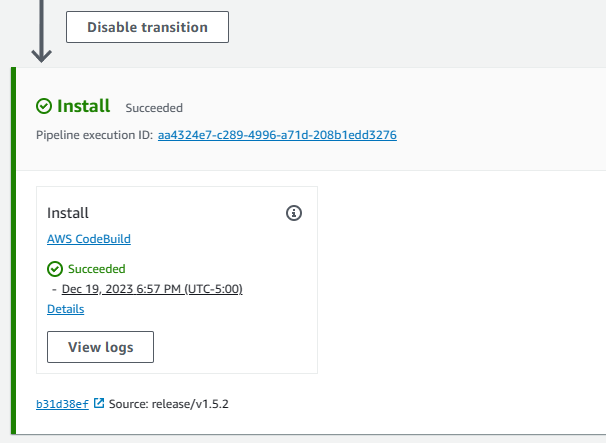

The stack ran successfully, which built and triggered the first run of the AWSAccelerator-Installer CodePipeline. The installer pipeline consists of two stages, Source and Install. I quickly ran into error number two in the first stage, a GitHub permissions error.

I don’t use CodePipeline all that often, so there is probably an easy way to proactively sync GitHub credentials. AWS made it easy enough to click in and complete the GitHub OAuth request to get past this stage error, although I did have to point it back to the correct GitHub repo and branch name to complete the request. After fixing this error, the installer pipeline completed successfully and created a new CodePipeline named AWSAccelerator-Pipeline.

Running the AWS Accelerator Pipeline

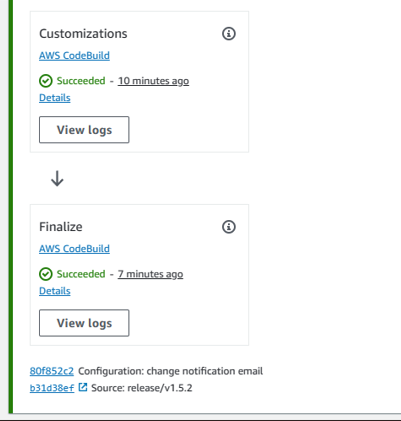

Once the AWSAccelerator-Pipeline execution started, I began an iterative trial-and-error process of fixing pipeline failures and re-executing stages. This pipeline consists of ten stages: Source -> Build -> Prepare -> Accounts -> Bootstrap -> Review -> Logging -> Organization -> SecurityAudit -> Deploy.
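Since much of the work was fixing a failure and re-running the failed stage, that loop can be partly scripted. This hedged sketch takes a boto3 CodePipeline client (or any object with the same two methods), finds the first stage whose latest execution failed via `get_pipeline_state`, and retries only its failed actions with `retry_stage_execution`. The default pipeline name comes from this post; the rest is illustrative.

```python
from typing import Any, Optional


def first_failed_stage(state: dict[str, Any]) -> Optional[tuple[str, str]]:
    """Return (stageName, pipelineExecutionId) of the first failed stage, or None.

    `state` is shaped like a CodePipeline GetPipelineState response.
    """
    for stage in state.get("stageStates", []):
        latest = stage.get("latestExecution") or {}  # stages not yet run have none
        if latest.get("status") == "Failed":
            return stage["stageName"], latest["pipelineExecutionId"]
    return None


def retry_failed_stage(client: Any, pipeline_name: str = "AWSAccelerator-Pipeline") -> Optional[str]:
    """Retry the failed actions of the first failed stage; return its name, or None."""
    failed = first_failed_stage(client.get_pipeline_state(name=pipeline_name))
    if failed is None:
        return None
    stage_name, execution_id = failed
    client.retry_stage_execution(
        pipelineName=pipeline_name,
        stageName=stage_name,
        pipelineExecutionId=execution_id,
        retryMode="FAILED_ACTIONS",
    )
    return stage_name
```

Usage would look like `retry_failed_stage(boto3.client("codepipeline"))`. A retry keeps the source revision of that execution, so it suits fixes made in the environment itself (like removing extra controls); a config change pushed to the repo needs a new pipeline execution to be picked up.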

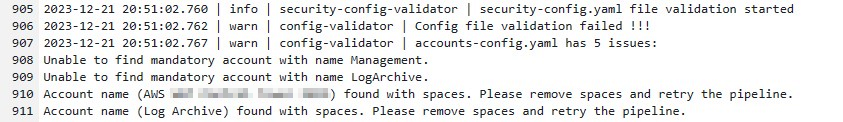

I ran into the same GitHub permissions error in the Source stage as I did during the installer pipeline. After applying the same quick fix, I hit the next error in the Build stage.

This is the first spot where I realized the brown-field deployment process can get pretty murky. The configuration files mentioned earlier require account names and root account email addresses. I simply entered the existing account names into these configuration files, which didn’t necessarily line up with what the LZA installer expects. For example, my existing Control Tower management account wasn’t named “Management”, so I changed the name in the config to reflect the reality in my existing env. In this case I had to backtrack and keep the default account names that were already in the config.

I also learned that the config file doesn’t accept spaces in the account names. So even though a default Control Tower deployment creates a “Log Archive” account, the LZA config files expect to see the name as “LogArchive.” At the end of the day, matching the names to the existing env doesn’t really matter, as long as the config files have the root account emails to reference the specific accounts in use regardless of their name.

At some point later I ran into the same situation: my Control Tower env had an account called Security, but the LZA config files reference an account named “SharedServices” that serves basically the same purpose. The fix is the same as above: don’t worry about the actual names, and reference the root account email of the existing account that matches the intent of each LZA config name.
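Both naming lessons can be checked before a pipeline run. The Python sketch below works on account entries already parsed out of accounts-config.yaml into dicts with `name` and `email` keys (YAML parsing, e.g. with PyYAML, is left out to stay stdlib-only). It flags missing mandatory names and names containing spaces, then matches each config entry to an existing account by email. The existing accounts are assumed to look like entries from the AWS Organizations `ListAccounts` response (`Id`, `Name`, `Email`), and the mandatory name set is an assumption based on the default sample config.

```python
from typing import Any

# Assumption: mandatory account names used by the default LZA sample config.
MANDATORY_NAMES = ("Management", "LogArchive", "Audit")


def account_name_errors(config_accounts: list[dict[str, str]]) -> list[str]:
    """Flag missing mandatory names and account names containing spaces."""
    names = {entry["name"] for entry in config_accounts}
    errors = [f"missing mandatory account '{n}'" for n in MANDATORY_NAMES if n not in names]
    errors += [
        f"account name '{entry['name']}' contains a space"
        for entry in config_accounts
        if " " in entry["name"]
    ]
    return errors


def match_by_email(
    config_accounts: list[dict[str, str]], org_accounts: list[dict[str, Any]]
) -> tuple[dict[str, tuple[str, str]], list[str]]:
    """Map each config name to (account Id, existing Name) by email.

    Emails are compared case-insensitively. Config names whose email matches
    no existing account are returned separately for review.
    """
    by_email = {acct["Email"].lower(): acct for acct in org_accounts}
    matched: dict[str, tuple[str, str]] = {}
    unmatched: list[str] = []
    for entry in config_accounts:
        acct = by_email.get(entry["email"].lower())
        if acct is None:
            unmatched.append(entry["name"])
        else:
            matched[entry["name"]] = (acct["Id"], acct["Name"])
    return matched, unmatched
```

In the Security/SharedServices case above, `match_by_email` would map `SharedServices` to the existing Security account through its email even though the names differ. Lowercasing emails is a convenience choice in this sketch.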

Once the Build stage completed successfully, I thought I was in the clear, but that was overly optimistic: I ran into an error during the Prepare stage.

I didn’t have anything very specific to go by, other than that in my existing Control Tower env I had applied some non-mandatory controls to some of the OUs in the organization. I went ahead and removed all the non-mandatory controls and anything else configured beyond a default Control Tower deployment. Considering that this brown-field env was a fairly basic lab, I suspect this is where customers with a more complex Control Tower env could run into pitfalls when attempting to retrofit LZA.
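To inventory extra controls before removing them, the Control Tower API can list what is enabled on an OU. This sketch assumes a boto3 `controltower` client, calls `list_enabled_controls` with `targetIdentifier` set to the OU ARN, follows `nextToken` pagination, and returns identifiers that are not in a keep set you supply. The keep set is hypothetical (deciding what to keep is up to you), and the sketch only lists controls; it does not disable anything.

```python
from typing import Any


def extra_controls(client: Any, ou_arn: str, keep: set[str]) -> list[str]:
    """Return identifiers of controls enabled on an OU that are not in `keep`."""
    extras: list[str] = []
    kwargs: dict[str, str] = {"targetIdentifier": ou_arn}
    while True:
        page = client.list_enabled_controls(**kwargs)
        for control in page.get("enabledControls", []):
            if control["controlIdentifier"] not in keep:
                extras.append(control["controlIdentifier"])
        token = page.get("nextToken")
        if not token:
            return extras
        kwargs["nextToken"] = token  # request the next page of results
```

Running it per OU before kicking off the pipeline gives a list to review, rather than discovering the extras through a failed Prepare stage.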

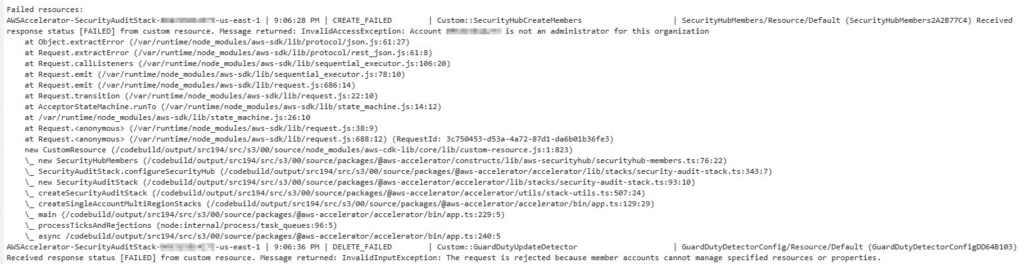

After I removed the extra controls, this stage of the pipeline completed with no further errors. Later I ran into another error: delegated administration for my security tooling was already assigned to a manually created Security account, but LZA expects it to be the Audit account. The CloudFormation templates could not automatically remove these settings, so I had to manually remove the existing delegated admin accounts, manually delete the CloudFormation stack that particular stage had created, and reconfigure delegated admin for Security Hub, GuardDuty, Macie and IAM Access Analyzer to use the Audit account.
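Checking where delegated admin currently lives before a run could save a failed stage. The read-only sketch below takes a boto3 `organizations` client, calls `list_delegated_administrators` for each service, and returns services whose delegated admin is not the Audit account. The four service principals are my assumption for Security Hub, GuardDuty, Macie and IAM Access Analyzer; the actual deregistration and re-registration remains the manual step described above.

```python
from typing import Any

# Assumption: Organizations service principals for the four services named above.
SECURITY_SERVICES = (
    "securityhub.amazonaws.com",
    "guardduty.amazonaws.com",
    "macie.amazonaws.com",
    "access-analyzer.amazonaws.com",
)


def misplaced_delegated_admins(client: Any, audit_account_id: str) -> list[tuple[str, str]]:
    """Return (service principal, account Id) pairs delegated to an account other than Audit."""
    misplaced: list[tuple[str, str]] = []
    for principal in SECURITY_SERVICES:
        kwargs = {"ServicePrincipal": principal}
        while True:
            page = client.list_delegated_administrators(**kwargs)
            for admin in page.get("DelegatedAdministrators", []):
                if admin["Id"] != audit_account_id:
                    misplaced.append((principal, admin["Id"]))
            token = page.get("NextToken")
            if not token:
                break
            kwargs["NextToken"] = token  # follow pagination if present
    return misplaced
```

An empty result means every listed service already points at the Audit account (or has no delegated admin at all).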

Overall it took eleven runs of this pipeline with various errors before I finally got a successfully completed full pipeline run!

While running LZA on top of an existing brown-field Control Tower env is possible, it took me lots of trial and error to make it through. I couldn’t find much info out there, and the AWS documentation isn’t explicit enough to make this foolproof. Digging further through the GitHub repo, I noticed that some of the reference folders had subfolders called images, which were super helpful and showed AWS design diagrams with more explicit detail than I had seen anywhere else.

I ran this against a fairly vanilla Control Tower env, and I suspect that a larger, more customized env could hit more bumps in the road than I did. With some planning, time and LOTS of patience, you should be able to add the benefits of LZA to a pre-existing Control Tower environment.

What specifically are those benefits? I’ll take a look at those in the next blog post…